12 Principles of Modern Software Development

A deep dive into the "12 factor app"

Introduction

The 12 Factor App methodology was developed by Adam Wiggins and his team at Heroku, a cloud platform-as-a-service (PaaS) provider. Heroku initially introduced the 12 factors in 2011 as a set of best practices based on their experience of running and managing thousands of applications in the cloud. These principles have since gained widespread adoption in the software industry and have become a valuable resource for developers looking to build cloud-native applications.

Why do we need a 12-factor app?

By adhering to the 12 factors, developers can ensure their applications are flexible, efficient, and resilient, allowing for seamless scalability, easy deployment across various environments, and streamlined maintenance. With the 12 Factor App approach, developers can unlock the potential of cloud computing, enhance collaboration, and future-proof their applications for evolving technology landscapes.

Codebase

The first rule of the 12-Factor App methodology emphasizes the importance of maintaining a single codebase for your application. This means that multiple developers can work on the same codebase simultaneously, each on their own development environment. By doing so, they can collaborate efficiently and add new features to the application.

Consider a scenario where we have a web application, and in the future, we decide to introduce a delivery service as part of the same application. In the past, it was common to have a single codebase that encompassed all the application's features and services. However, following the principles of the 12-Factor App methodology, this approach is no longer recommended.

When multiple application services coexist within a single codebase, that codebase no longer represents one app. It is effectively a distributed system whose components are each an app in their own right. According to the 12 Factor App guidelines, it is considered a violation to have multiple applications sharing the same codebase. To adhere to the methodology, each application service should have its own separate codebase.

Within each codebase, there can still be multiple deployments. This means that the same code will be used to deploy the application on different environments, such as development, staging, and production. By separating the application services into their own codebases while maintaining the same code for different deployments, developers can achieve greater modularity, scalability, and flexibility in managing their applications.

Explicitly declare & isolate dependencies

It is crucial to avoid relying on the implicit existence of system-wide dependencies. This means that an application should not assume that the required dependencies will already be installed on the system where it will be deployed.

Many applications have multiple dependencies that need to be installed before the application can run. For instance, in Python, these dependencies are typically listed in a file called "requirements.txt" (e.g., flask==2.0.0). Without specifying the version number, different developers may end up installing different versions of the dependencies, leading to inconsistencies when the program is executed in different environments. To address this, a best practice is to create an isolated environment for each application, including all necessary dependencies.
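As a rough illustration of why exact pins matter, the sketch below scans the text of a requirements file and reports every entry that is not pinned with "==". The function name find_unpinned and the sample entries are assumptions made for this example, and the parsing only handles simple lines of the "package==version" kind.

```python
def find_unpinned(requirements_text: str) -> list:
    """Return requirement entries that do not pin an exact version with '=='."""
    unpinned = []
    for raw_line in requirements_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        # Skip blank lines and pip options such as "-r other.txt".
        if not line or line.startswith("-"):
            continue
        if "==" not in line:
            unpinned.append(line)
    return unpinned


example = """
flask==2.0.0
redis
requests>=2.0  # a range, not an exact pin
"""
print(find_unpinned(example))  # ['redis', 'requests>=2.0']
```

A check like this could run in a CI step so that an unpinned dependency is caught before it produces different installs on different machines.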

Python provides a concept called a virtual environment, which allows developers to create isolated environments with their own versions of dependencies. This ensures that external dependencies do not interfere with the application and that explicit dependencies and packages are consistently applied throughout the development, staging, and production environments.

However, there may be cases where an application relies on tools or configurations that exist outside of the Python virtual environment. For example, it might depend on curl commands or other system-dependent tools. To handle such scenarios in a universal manner across programming languages, Docker containers can be utilized.

Docker provides a platform-agnostic approach to containerization, allowing applications and their dependencies to be packaged into self-contained units called containers. These containers encapsulate the application, its dependencies, and any necessary system configurations. By leveraging Docker, developers can ensure that their applications have consistent and reproducible environments across different systems, regardless of the programming language used.

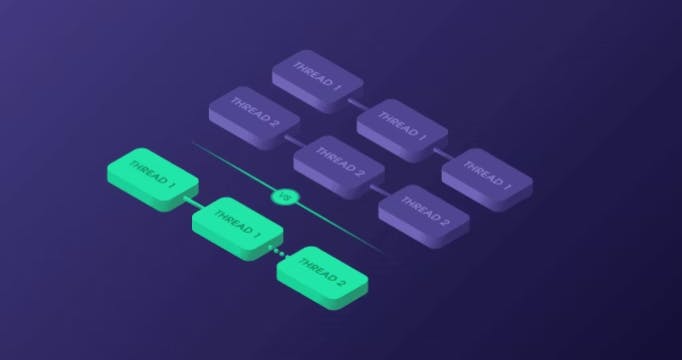

Concurrency

Once we have containerized our application and run it as Docker containers, we typically have a single instance or process of our application serving multiple users. However, as the number of users visiting our site increases, we need to ensure that our application can handle the additional load. Scaling up vertically by increasing the resources of the server is an option, but it comes with drawbacks. Vertical scaling often requires taking down the server, resulting in downtime, and there is a limit to the maximum resources that can be added to a single server.

Fortunately, with the advent of cloud computing, provisioning new servers has become incredibly fast and efficient. Within a matter of minutes, we can provision additional servers and spin up more instances of our application. To distribute the load across these instances, we can introduce load balancers. Load balancers evenly distribute incoming requests among the different instances of our application, ensuring optimal performance and availability.

However, for this scaling approach to work effectively, it is essential to build our application as an independent and stateless app. This means that the application should not rely on storing session state or other data on the server itself. Instead, all necessary information should be stored in a centralized and scalable data store or database. By following this principle of building stateless applications, we can easily scale our application horizontally by running multiple instances concurrently.

The 12 Factor App methodology treats processes as first-class citizens. It emphasizes the importance of designing applications that can scale out horizontally by running multiple instances simultaneously, rather than relying on vertical scaling. By adhering to the principles of the 12 Factor App, we can build applications that are inherently scalable, resilient, and able to handle increased user traffic with ease.
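To make the idea of spreading requests over several stateless instances concrete, here is a minimal round-robin sketch. It is not how a production load balancer is implemented. The class RoundRobinBalancer, the helper make_instance, and the instance names "web.1" and "web.2" are all hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hands each incoming request to the next instance in turn."""

    def __init__(self, instances):
        self._cycle = itertools.cycle(list(instances))

    def route(self, request):
        instance = next(self._cycle)
        return instance(request)


def make_instance(name):
    """Build a stateless handler: its answer depends only on the request."""
    def handle(request):
        return f"{name} handled {request}"
    return handle


balancer = RoundRobinBalancer([make_instance("web.1"), make_instance("web.2")])
for path in ["/a", "/b", "/c"]:
    print(balancer.route(path))
# web.1 handled /a
# web.2 handled /b
# web.1 handled /c
```

Because the handlers keep no state of their own, adding a third instance to the list is all it takes to scale out in this toy model.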

Processes

Consider a scenario where we want to add a feature to our application that displays a visitor count, incrementing each time a new user visits our website. Initially, this feature works well when we have only one process running because the visitor count is stored in the memory of that specific process. However, the problem arises when we have multiple processes running simultaneously.

Each process maintains its own version of the visitor count, leading to different numbers being displayed to different users depending on which process served their request. The same issue arises with user-session information. When a user logs into the website, certain session information, such as login details and expiration time, is stored in the process's memory or locally in the file system. If a future request from that user is directed to another process, their session information may not be available, resulting in the user being considered logged out.

Although load balancers can be configured to redirect users to the same process each time, known as sticky sessions, this approach still poses challenges. For instance, if a process crashes for any reason, all locally stored data will be lost. Therefore, sticky sessions are considered a violation of the twelve-factor principles and should be avoided.

To address this issue, it is crucial not to store any critical information in the process memory. Instead, such data should be stored in an external backing service that can be easily accessed by all processes. The external service should ideally be a reliable database or caching service like Redis. By storing session information and other data in an external service, all processes have access to the same set of data. It becomes irrelevant which process serves a particular user request since all the processes can retrieve the required data from the shared external service.

By following this approach and avoiding storing critical information in process memory, we ensure data consistency and eliminate the risks associated with process-specific data loss. By utilizing external backing services like databases or caching systems, we can achieve a scalable and resilient application architecture that aligns with the principles of the twelve-factor methodology.
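The difference between the two designs can be sketched in a few lines. The SharedStore class below is only an in-memory stand-in for an external service such as Redis, and all class names are assumptions made for this example. In a real deployment a Redis client would take its place.

```python
class SharedStore:
    """In-memory stand-in for an external backing service such as Redis."""

    def __init__(self):
        self._data = {}

    def incr(self, key):
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


class LocalCounterProcess:
    """Anti-pattern: the count lives in this process's own memory."""

    def __init__(self):
        self.visits = 0

    def handle_visit(self):
        self.visits += 1
        return self.visits


class SharedCounterProcess:
    """Stateless process: the count lives in the shared store."""

    def __init__(self, store):
        self.store = store

    def handle_visit(self):
        return self.store.incr("visitor_count")


# Four visits alternate between two processes, as a load balancer might do.
local = [LocalCounterProcess(), LocalCounterProcess()]
local_counts = [local[i % 2].handle_visit() for i in range(4)]

store = SharedStore()
shared = [SharedCounterProcess(store), SharedCounterProcess(store)]
shared_counts = [shared[i % 2].handle_visit() for i in range(4)]

print(local_counts)   # [1, 1, 2, 2] - each process has its own number
print(shared_counts)  # [1, 2, 3, 4] - every process sees the same count
```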

Backing Services

When integrating external services like Redis into our application as a caching service, it is essential to treat them as "attached resources" following the principles of the twelve-factor methodology. Alongside Redis, there could be other similar services, such as an SMTP service for sending emails or integrating with S3 to store images. These services are considered backing services because they provide specific functionality that supports our application.

Regardless of where these services are hosted, whether it be locally, in a cloud environment, or as a managed service, our application should be able to seamlessly work with them without requiring changes to the application code. We should avoid having any code that is specific to a locally hosted service or a remotely hosted Redis service, for example. The application code should be written in a way that allows us to easily point it to a different instance or location of the service, and it should continue to function as expected.

By decoupling our application code from specific hosting or deployment details of the backing services, we achieve flexibility and portability. This means that we can switch between different instances or providers of the services without making modifications to our application's codebase. This decoupling allows us to adapt to changing environments, scale our application, and leverage managed services or cloud-based resources without disrupting the functionality of our application.

By treating these services as attached resources and ensuring our application code remains agnostic to their specific hosting details, we maintain a modular and portable architecture that aligns with the principles of the twelve-factor methodology.
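One common way to keep the code agnostic is to describe the resource with a single URL that is supplied from outside the code. The sketch below assumes an environment variable named REDIS_URL and a helper called redis_settings. Both names are illustrative and do not come from any particular library.

```python
import os
from urllib.parse import urlparse


def redis_settings(url: str) -> dict:
    """Split a redis:// URL into the pieces a client would need."""
    parsed = urlparse(url)
    return {
        "host": parsed.hostname,
        "port": parsed.port or 6379,               # fall back to the usual Redis port
        "db": int(parsed.path.lstrip("/") or 0),   # "/2" selects database 2
    }


# The same code works for a local, cloud, or managed instance:
# only the URL handed to the process changes.
url = os.environ.get("REDIS_URL", "redis://localhost:6379/0")
print(redis_settings(url))
```

Swapping a local Redis for a managed one then becomes a matter of changing REDIS_URL for that deployment, with no edit to the application code.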

Config

When our Python code contains hard-coded values for the Redis host and port, such as redisDB="redis", host="redisdb", port="6380", it introduces challenges when deploying the application to different environments like production, staging, and development. Each environment may require a different Redis instance with its own host and port, so hard-coded values lead to inconsistencies and errors during deployment. Hard-coding is not a best practice because it lacks flexibility and makes environment-specific configuration difficult to manage.

To address this issue, it is recommended to separate the environment-specific configuration from the main application code and keep it out of version control. This can be achieved by defining a separate file, usually named .env, to store these configuration settings. The Python code can then load the values defined in the .env file as environment variables and read them to fetch the required configuration.

Following the twelve-factor app methodology, storing configuration in environment variables is considered a best practice. For example, we can retrieve the Redis configuration in the code using environment variables like this: Redis(host=os.getenv('HOST'), port=os.getenv('PORT')). This approach enables us to use different configurations for different deployments, such as testing, staging, and production environments. It also makes it possible to open-source the project without exposing sensitive configuration details.

By adopting this approach of separating environment configuration into a .env file and accessing it through environment variables, we achieve greater flexibility, maintainability, and security. It allows for seamless deployment across different environments and avoids the need for code-level changes when switching configurations.
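A small sketch of reading this configuration is shown below. It uses the HOST and PORT variable names from the example above. The function name load_redis_config and the fallback values are assumptions. Note that environment variables are always strings, so the port is converted to an integer before use.

```python
import os


def load_redis_config(env=os.environ):
    """Read Redis settings from environment variables, with assumed defaults."""
    return {
        "host": env.get("HOST", "localhost"),
        "port": int(env.get("PORT", "6379")),  # env values are strings; cast explicitly
    }


# Passing a plain dict stands in for the environment of one deployment.
print(load_redis_config({"HOST": "redisdb", "PORT": "6380"}))
# {'host': 'redisdb', 'port': 6380}
```

The resulting dictionary could then be handed to a Redis client, and each deployment would supply its own values without any change to the code.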

Build, Release & Run

In the context of the 12-Factor App methodology, rolling back recent changes without pushing another commit can be achieved through the clear separation of the build, release, and run stages.

The typical workflow begins with the development phase, where code is written in an IDE. However, the code in its text format is not directly runnable by end-users. It needs to be transformed into an executable format, such as a Docker image. This transformation is the job of the build phase.

In the build phase, a build script is employed. In the case of a Docker image, the command "docker build" is commonly used, leveraging a Dockerfile. This process creates the Docker image for the application. The resulting image is then combined with the configuration for a specific environment, and together they become the release object. Each release object is assigned a unique release ID. It is important to note that even minor changes, such as fixing a typo, should trigger the creation of a new release.

Finally, the run phase involves running the release object in its respective environment. The same build is used to run in different environments, ensuring consistency across environments. Any minor change to the code triggers a new build, resulting in a new release and subsequent deployment.

This clear separation of the build, release, and run stages allows for easy rollback in case of issues or errors. If a need arises to revert to a previous version, it can be achieved by simply deploying the previous release object associated with the desired version.

By following this methodology, larger and more complex projects can effectively manage rollbacks without the need for an additional commit. It ensures that each release is versioned, allowing for reproducibility and traceability, and facilitates consistent deployments across different environments.
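The relationship between builds, releases, and rollbacks can be modelled in a few lines. This is only a toy model under stated assumptions: the names Release and ReleaseHistory, the "v1" style IDs, and the image tags are all hypothetical, and a real platform would persist this history rather than keep it in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Release:
    release_id: str  # unique ID, for example "v1"
    image: str       # the build artifact, for example a Docker image tag
    config: dict     # configuration for the target environment


class ReleaseHistory:
    """Append-only list of releases plus a pointer to the one that is running."""

    def __init__(self):
        self._releases = []
        self._current = -1

    def create_release(self, image, config):
        release = Release(f"v{len(self._releases) + 1}", image, dict(config))
        self._releases.append(release)
        self._current = len(self._releases) - 1
        return release

    def rollback(self):
        """Point back at the previous release; no new build or commit is needed."""
        if self._current <= 0:
            raise RuntimeError("no earlier release to roll back to")
        self._current -= 1
        return self._releases[self._current]

    @property
    def current(self):
        if self._current < 0:
            raise RuntimeError("nothing has been released yet")
        return self._releases[self._current]


history = ReleaseHistory()
history.create_release("myapp:build-1", {"HOST": "redisdb"})
history.create_release("myapp:build-2", {"HOST": "redisdb"})
print(history.current.release_id)     # v2
print(history.rollback().release_id)  # v1
```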

Port Binding

A 12-Factor App is designed to be self-contained and does not rely on a specific web server to function. In traditional web applications, the application code is often tightly coupled with a specific web server, such as Apache or Nginx. However, the 12-Factor App approach decouples the application code from the underlying web server, making it more flexible and portable.

When running multiple instances of our application on the same server, each instance should be able to bind to a specific port, such as 5001 or 5002. Other services, such as Redis, may have their own port binding configurations, like Redis listening on port 6379.

To export HTTP as a service, our application binds to a specific port and listens for incoming requests on that port. This allows the application to handle HTTP requests independently without relying on a web server to route and process the requests.

The key distinction of a 12-Factor App is that it does not require a specific web server to operate. Instead, it implements its own mechanisms for handling incoming requests and responding to them accordingly. This self-contained nature of the 12 Factor App allows it to be more adaptable, scalable, and compatible with various hosting environments, such as cloud platforms and container orchestration systems.
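As a minimal sketch of port binding, the snippet below uses only Python's standard library to export HTTP on a port taken from the environment. The handler name HelloHandler, the helper make_server, the PORT variable, and the default of 5001 are assumptions for illustration. A real app would normally bring its own web framework as a declared dependency.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request itself; no external web server is involved."""

    def do_GET(self):
        body = b"hello from a self-contained app\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=None, host="0.0.0.0"):
    """Bind to the given port, or to the one named by the PORT variable."""
    if port is None:
        port = int(os.environ.get("PORT", "5001"))
    return HTTPServer((host, port), HelloHandler)
```

Calling make_server().serve_forever() starts the app. Running a second instance with PORT=5002 on the same machine works without any change to the code.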

Disposability

Twelve-factor apps are designed to be disposable, meaning they can be started or stopped at a moment's notice. When it comes to scaling a twelve-factor app, it should be capable of provisioning additional instances rapidly in response to increased demand, typically within a matter of seconds. To achieve this, the startup time of the app's processes should be minimized, avoiding reliance on complex startup scripts.

Similarly, when the load decreases, the app should be able to reduce the number of instances gracefully, terminating them when they are no longer needed. The processes within a twelve-factor app should be disposable, allowing them to be shut down gracefully in response to a SIGTERM signal from the process manager.

But why do we need a graceful shutdown process? When a Docker container receives a stop command (docker stop), Docker first sends a SIGTERM signal to the processes inside the container. If the container doesn't stop within a grace period, Docker then sends a SIGKILL signal to forcefully terminate the processes. The purpose of this two-step process is to allow the application to shut down gracefully.

During a graceful shutdown, the application can stop accepting new requests and complete any ongoing requests. This gives users sufficient time to finish their interactions with the app without disruptions. By handling the SIGTERM signal properly, the application can avoid unexpected data loss or resource leaks that may occur if the process is abruptly terminated with a SIGKILL signal.

By implementing a graceful shutdown process, a twelve-factor app ensures that users experience minimal disruption during scaling or termination events. It allows the app to handle ongoing requests appropriately and gracefully release any held resources before shutting down.
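A graceful shutdown can be sketched with Python's signal module. The names below (shutdown_requested, handle_sigterm, install_sigterm_handler, process_jobs) are hypothetical. To keep the example runnable anywhere, the signal is simulated by calling the handler directly in the middle of a job, rather than by actually stopping a container.

```python
import signal
import threading

shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Record that a stop was requested; work in progress is allowed to finish."""
    shutdown_requested.set()


def install_sigterm_handler():
    """Call once at startup, from the main thread."""
    signal.signal(signal.SIGTERM, handle_sigterm)


def process_jobs(jobs):
    """Run jobs in order, but start no new job once shutdown was requested."""
    completed = []
    for job in jobs:
        if shutdown_requested.is_set():
            break
        completed.append(job())
    return completed


def job_interrupted_by_sigterm():
    # Simulate SIGTERM arriving while this job is still running.
    handle_sigterm(signal.SIGTERM, None)
    return "job-2"


print(process_jobs([lambda: "job-1", job_interrupted_by_sigterm, lambda: "job-3"]))
# ['job-1', 'job-2'] - the in-flight job completes, job-3 is never started
```

The important design choice is that the handler only sets a flag. The main loop decides when it is safe to stop, so cleanup finishes well inside the grace period before SIGKILL would arrive.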

Dev/prod parity

In a typical software development lifecycle, there are three main environments where the application will be deployed: development, staging, and production. Each environment serves a specific purpose in the development and deployment process.

The development environment is where the application is built and tested by developers to iterate on changes and new features. It provides a controlled environment for developers to make modifications and test the application's functionality.

The staging environment is where the application is deployed and tested against a setup that closely resembles the production environment. This environment allows for comprehensive testing, including integration testing, performance testing, and user acceptance testing, to ensure that the application performs as expected before it is released to production.

Finally, the production environment is where the application is hosted and accessed by users. It is the live environment where the application serves its intended purpose and is accessed by the end-users.

Traditionally, transitioning an application from the development environment to the production environment could take weeks or even months. The process involved different teams responsible for coding, deploying, and managing production environments. It often led to time gaps between development and production, and changes made during that period could affect the functionality of the application.

This gap between development and production environments, along with potential tooling differences, created challenges and unexpected consequences during deployment. However, the 12-Factor App methodology addresses this issue by promoting continuous deployment and keeping the gap between development and production small.

The 12 Factor App encourages developers to resist the temptation to use different backing services or make significant changes between the development and production environments. By minimizing variations between environments, developers can reduce the chances of unexpected issues arising during deployment and ensure a smoother transition from development to production. Modern deployment tools and practices help to narrow the gap further, enabling faster and more reliable software releases.

Logs

Traditionally, applications followed different approaches for storing logs, such as writing logs to local files. However, this approach poses challenges in containerized environments, as containers can be terminated at any time, resulting in lost logs. Additionally, coding the application to write logs to specific files or file systems creates further limitations.

Alternatively, some applications attempt to push logs to a centralized logging system like Fluentd. While centralized log storage is encouraged, tightly coupling the application to a specific logging solution goes against the principles of the 12-Factor App methodology.

A 12-Factor App should not be concerned with the routing or storage of its output stream. Instead, it should write all logs to standard output, ideally in a structured format like JSON. This approach ensures logs are decoupled from specific files or logging systems. The logs can then be collected by an agent or tool in the execution environment that is responsible for transferring them to a centralized location for consolidation and analysis.

By adopting this practice, a 12 Factor App promotes centralized log storage in a structured format. It allows for easier log management, analysis, and troubleshooting while maintaining flexibility in choosing the most suitable centralized logging solution for the specific deployment environment.
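Here is one possible way to emit structured logs to standard output using only Python's standard logging and json modules. The JsonFormatter class, the logger name "visitor-app", and the choice of fields are assumptions made for this sketch.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


# Write to standard output only; routing and storage are left to the environment.
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("visitor-app")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # avoid duplicate lines from the root logger

logger.info("visitor count incremented to %d", 42)
# {"level": "INFO", "logger": "visitor-app", "message": "visitor count incremented to 42"}
```

Because the app only writes to its output stream, a log collector can be added, swapped, or removed later without touching this code.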

Admin processes

The 12 Factor App methodology recommends keeping administrative tasks separate from the application process. Specifically, it suggests running any one-time or periodic administrative tasks, such as database migrations or server restarts, as separate processes or applications. These tasks should be executed in an isolated setup that is identical to the one the application runs in, ensuring automation, scalability, and reproducibility.

To accomplish this, the administrative tasks should ideally run on a system identical to the one the application is deployed on in the production environment. For instance, if the application relies on a Redis database, an additional Docker container can be spun up to connect to the same Redis database and execute the necessary reset script to reset the visitor count.

By isolating administrative tasks and treating them as separate processes or applications, the 12 Factor App methodology promotes a modular and efficient approach to managing administrative operations. This separation allows for better control, automation, and scalability of these tasks while maintaining consistency with the production environment.
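A reset script of this kind might look like the sketch below. The InMemoryStore class is only a hypothetical stand-in for the shared Redis database, and the function name reset_visitor_count and the key "visitor_count" are assumptions. In practice the script would run as its own one-off process, using the same release and configuration as the app.

```python
class InMemoryStore:
    """Hypothetical stand-in for the shared Redis database."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


def reset_visitor_count(store, key="visitor_count"):
    """One-off admin task: zero the counter and report the previous value."""
    previous = store.get(key)
    store.set(key, 0)
    return previous


store = InMemoryStore()
store.set("visitor_count", 1234)
print(reset_visitor_count(store))  # 1234
print(store.get("visitor_count"))  # 0
```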